Methodology

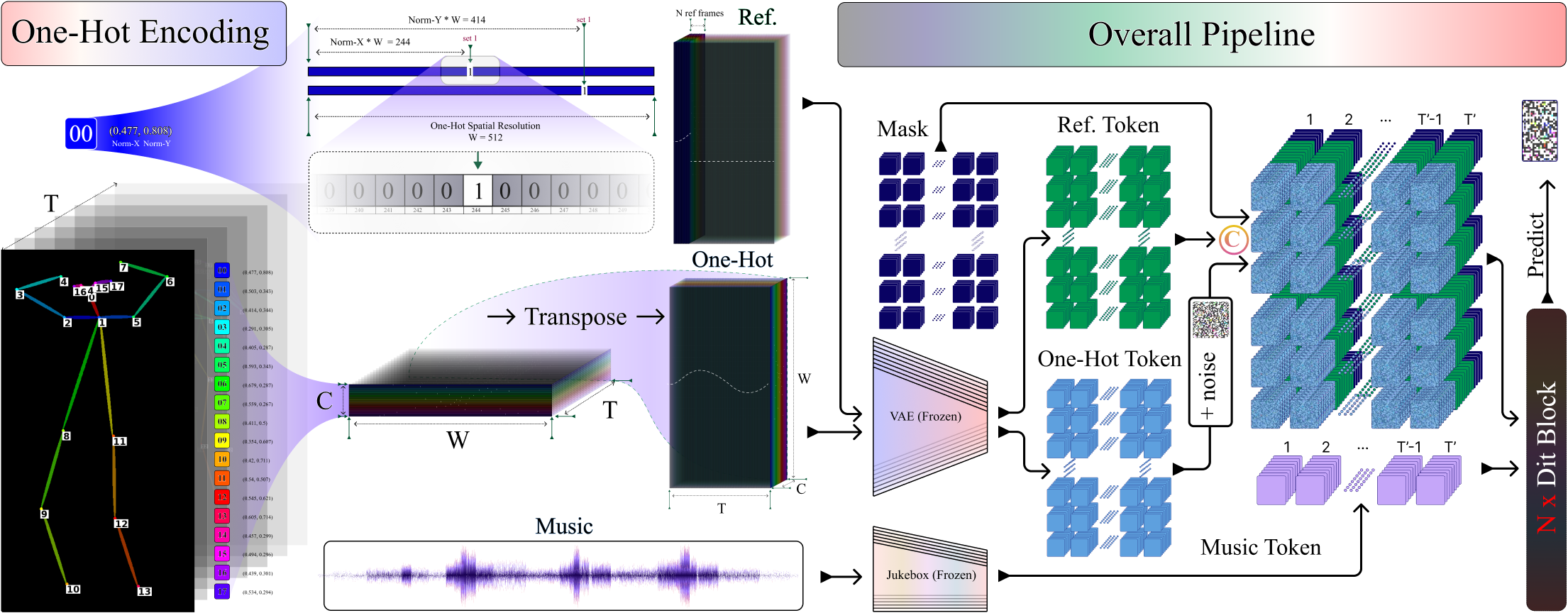

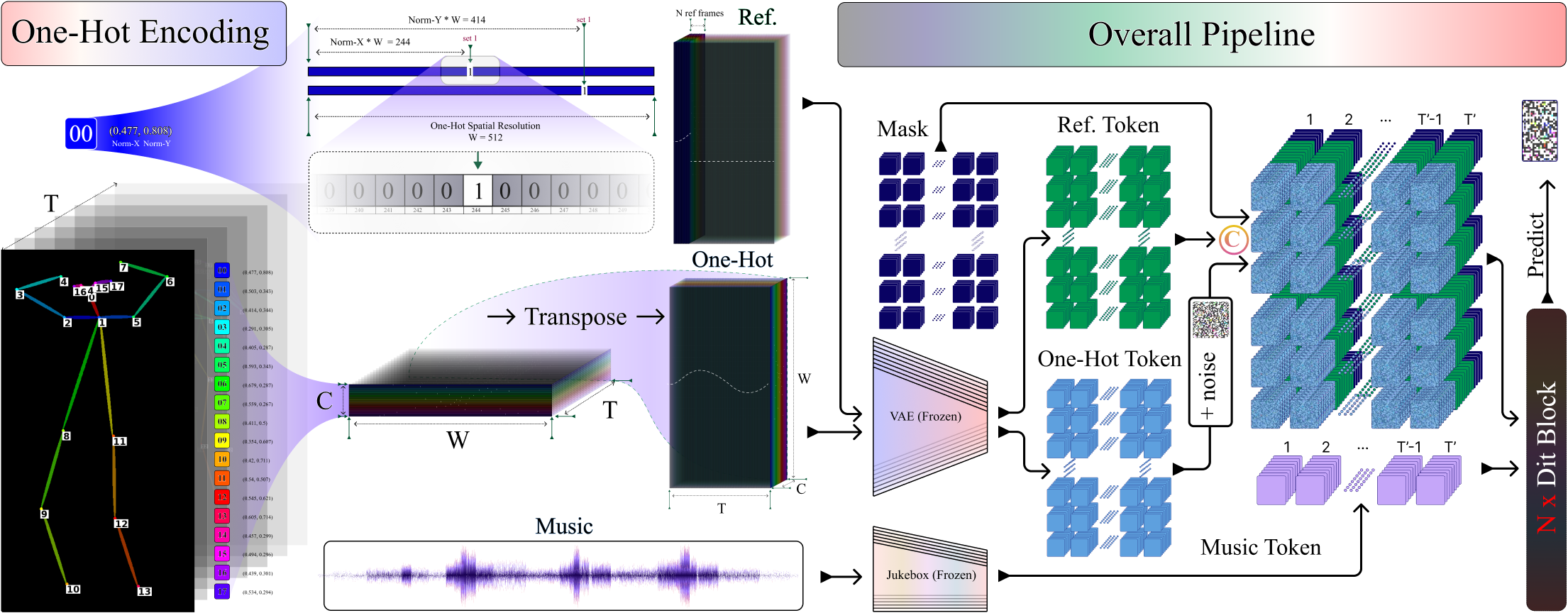

Overview architecture. Left: Illustration of One-Hot encoding of 2D poses. Right: Overall Pipeline.

Recent pose-to-video models can translate 2D pose sequences into photorealistic, identity-preserving dance videos, so the key challenge is to generate temporally coherent, rhythm-aligned 2D poses from music, especially under complex, high-variance in-the-wild distributions. We address this by reframing music-to-dance generation as a music-token-conditioned multi-channel image synthesis problem: 2D pose sequences are encoded as one-hot images, compressed by a pretrained image VAE, and modeled with a DiT-style backbone, allowing us to inherit architectural and training advances from modern text-to-image models and better capture high-variance 2D pose distributions. On top of this formulation, we introduce (i) a time-shared temporal indexing scheme that explicitly synchronizes music tokens and pose latents over time and (ii) a reference-pose conditioning strategy that preserves subject-specific body proportions and on-screen scale while enabling long-horizon segment-and-stitch generation. Experiments on a large in-the-wild 2D dance corpus and the calibrated AIST++2D benchmark show consistent improvements over representative music-to-dance methods in pose- and video-space metrics and human preference, and ablations validate the contributions of the representation, temporal indexing, and reference conditioning.
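The one-hot pose image mentioned above can be illustrated with a minimal sketch. It assumes one channel per joint and a single active pixel at that joint's rounded location. The function names `encode_one_hot` and `decode_one_hot`, the normalized `[0, 1]` coordinate convention, and the grid size are illustrative assumptions, not the authors' implementation. The actual channel layout, resolution, and handling of occluded joints may differ.

```python
import numpy as np

def encode_one_hot(keypoints, height, width):
    """Encode one frame of 2D joints as a (J, H, W) one-hot image.

    Assumption: keypoints is a (J, 2) array of (x, y) values normalized
    to [0, 1]; each joint gets its own channel with exactly one pixel set.
    """
    keypoints = np.asarray(keypoints, dtype=np.float64)
    num_joints = keypoints.shape[0]
    # Map normalized coordinates to integer pixel indices on the grid.
    cols = np.clip(np.round(keypoints[:, 0] * (width - 1)).astype(int), 0, width - 1)
    rows = np.clip(np.round(keypoints[:, 1] * (height - 1)).astype(int), 0, height - 1)
    image = np.zeros((num_joints, height, width), dtype=np.uint8)
    image[np.arange(num_joints), rows, cols] = 1
    return image

def decode_one_hot(image):
    """Recover normalized (x, y) per joint via a per-channel argmax."""
    num_joints, height, width = image.shape
    flat_index = image.reshape(num_joints, -1).argmax(axis=1)
    rows, cols = np.divmod(flat_index, width)
    return np.stack([cols / (width - 1), rows / (height - 1)], axis=1)

# Three hypothetical joints on a 5x5 grid.
pose = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.25]]
pose_image = encode_one_hot(pose, height=5, width=5)
recovered = decode_one_hot(pose_image)
```

Stacking such per-frame images over time gives the multi-channel input that an image VAE could then compress. Quantization to the pixel grid bounds the round-trip error by half a pixel per axis.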

Additional experimental results and demonstrations

If you find our work useful, please cite:

@misc{zhang2025reframingmusicdriven2ddance,
  title={Reframing Music-Driven 2D Dance Pose Generation as Multi-Channel Image Generation},
  author={Yan Zhang and Han Zou and Lincong Feng and Cong Xie and Ruiqi Yu and Zhenpeng Zhan},
  year={2025},
  eprint={2512.11720},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
}